What Is Grid Computing?

Grid computing is a distributed computing paradigm that enables resources to be shared across multiple organizations and locations. It integrates resources such as compute power, storage, and data so that users can access them as if they were local. The goal of grid computing is to provide unified, coordinated access to distributed resources in order to solve complex problems that require large amounts of computational power and data storage.

Another Definition of Grid Computing:

Grid computing is a distributed computing paradigm that emerged in the late 1990s in response to the growing need for large-scale computing resources to solve complex problems. The idea is to share resources, such as computational power and data storage, across multiple organizations and locations in order to solve problems that no single computer or organization could tackle alone.

The Fundamentals of Grid Computing:

Resource sharing:

Grid computing allows resources such as compute power, storage, and data to be shared across multiple organizations and locations. Pooling resources in this way puts otherwise idle machines to work and makes it possible to solve problems that require more computational power and data storage than any single participant owns.

Virtualization:

Grid computing uses virtualization to present shared resources through a common interface, so that users can work with them as if they were local. Users do not need to know which machine runs their job or where their data is physically stored.

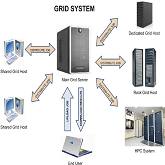
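The virtualization idea described above can be illustrated with a small sketch: every resource exposes the same interface, and the caller submits work without knowing which site runs it. The class names `ComputeResource`, `LocalCluster`, and `PartnerSite` and the `submit` function are hypothetical, and both "sites" run the task in-process; a real grid would hand the job to middleware that ships it over the network.

```python
from abc import ABC, abstractmethod


class ComputeResource(ABC):
    """Hypothetical uniform interface that hides where and how a job runs."""

    @abstractmethod
    def run(self, task, *args):
        """Execute task(*args) and return its result."""


class LocalCluster(ComputeResource):
    # Stand-in for a cluster at the user's own organization.
    def run(self, task, *args):
        return task(*args)


class PartnerSite(ComputeResource):
    # Stand-in for a remote site; here the task still runs locally,
    # whereas real grid middleware would submit it to the remote machine.
    def __init__(self, name):
        self.name = name
        self.jobs_run = 0

    def run(self, task, *args):
        self.jobs_run += 1
        return task(*args)


def submit(resource: ComputeResource, task, *args):
    # The caller only depends on the common interface, not on site details.
    return resource.run(task, *args)


if __name__ == "__main__":
    square = lambda x: x * x
    for site in (LocalCluster(), PartnerSite("partner-a")):
        print(type(site).__name__, submit(site, square, 7))
```

Because `submit` depends only on the abstract interface, adding a new kind of site means writing one more subclass; existing user code does not change.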

Coordination:

Grid computing uses coordination mechanisms, such as job schedulers and resource managers, to coordinate the sharing and use of resources across multiple organizations and locations. A scheduler decides which jobs run on which resources and when, so that work is spread across the available sites rather than queuing on a single machine.
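To make the scheduler's role concrete, here is a minimal sketch of one simple policy: sort jobs from longest to shortest and always give the next job to the least-loaded site (the "longest processing time first" heuristic). The `Job` type, the cost estimates, and the site names are assumptions for illustration; production grid schedulers also weigh data location, queue policies, and site availability.

```python
import heapq
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    cost: float  # estimated run time in arbitrary units (assumed to be known up front)


def schedule(jobs, sites):
    """Greedily assign each job to the currently least-loaded site."""
    # Min-heap of (current_load, site_name): the least-loaded site is always on top.
    heap = [(0.0, site) for site in sites]
    heapq.heapify(heap)
    plan = {site: [] for site in sites}
    # Placing the largest jobs first tends to give a more even final load.
    for job in sorted(jobs, key=lambda j: j.cost, reverse=True):
        load, site = heapq.heappop(heap)
        plan[site].append(job.name)
        heapq.heappush(heap, (load + job.cost, site))
    return plan


if __name__ == "__main__":
    jobs = [Job("a", 5), Job("b", 3), Job("c", 3), Job("d", 2)]
    print(schedule(jobs, ["site-a", "site-b"]))
```

With the example jobs, `site-a` receives `a` and `d` (total load 7) and `site-b` receives `b` and `c` (total load 6), instead of all four jobs waiting on one machine.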

Security:

Grid computing uses security mechanisms such as authentication (verifying who a user is) and authorization (deciding what that user may access) to ensure that resources are shared and used securely across multiple organizations and locations.
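The difference between the two checks can be shown with a small sketch. To keep it self-contained it authenticates users with an HMAC token derived from a shared secret, which is a simplification: grids have typically relied on X.509 certificates instead. The secret, the user names, the resource names, and the `ACL` table are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical shared secret; real grids usually authenticate with X.509 certificates.
SECRET = b"demo-secret"

# Hypothetical authorization table: which user may use which resources.
ACL = {
    "alice": {"cluster-a", "storage-b"},
    "bob": {"cluster-a"},
}


def sign(user: str) -> str:
    # Token a trusted issuer would hand to the user after verifying their identity.
    return hmac.new(SECRET, user.encode(), hashlib.sha256).hexdigest()


def authenticate(user: str, token: str) -> bool:
    # Authentication: is this really the claimed user? Compare in constant time.
    return hmac.compare_digest(sign(user), token)


def authorize(user: str, resource: str) -> bool:
    # Authorization: is this user allowed to use this resource?
    return resource in ACL.get(user, set())


def access(user: str, token: str, resource: str) -> str:
    if not authenticate(user, token):
        return "denied: authentication failed"
    if not authorize(user, resource):
        return "denied: not authorized"
    return "granted"


if __name__ == "__main__":
    print(access("bob", sign("bob"), "storage-b"))
```

Keeping the two checks separate mirrors the distinction in the paragraph above: a user can prove their identity and still be refused a resource their organization has not been granted.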

Monitoring and Management:

Grid computing uses monitoring and management tools to monitor the performance and usage of resources and to manage the allocation and scheduling of resources.
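A common way to monitor distributed resources is through heartbeats: each site periodically reports that it is alive along with its current load, and sites that stop reporting are treated as unavailable. The sketch below assumes that model. The `Monitor` class, its 30-second timeout, and the CPU-based utilization metric are illustrative choices, and time is passed in explicitly so that the behavior is easy to follow.

```python
from dataclasses import dataclass, field


@dataclass
class Monitor:
    timeout: float = 30.0  # seconds without a heartbeat before a site counts as down (assumed value)
    last_seen: dict = field(default_factory=dict)
    utilization: dict = field(default_factory=dict)

    def heartbeat(self, site: str, now: float, busy_cpus: int, total_cpus: int) -> None:
        # Record that the site is alive and how busy it currently is.
        self.last_seen[site] = now
        self.utilization[site] = busy_cpus / total_cpus

    def available(self, now: float) -> list:
        # Sites that reported within the timeout, least utilized first,
        # which a resource manager could use when allocating new jobs.
        alive = [s for s, t in self.last_seen.items() if now - t <= self.timeout]
        return sorted(alive, key=lambda s: self.utilization[s])


if __name__ == "__main__":
    m = Monitor()
    m.heartbeat("site-a", now=0, busy_cpus=8, total_cpus=10)
    m.heartbeat("site-b", now=20, busy_cpus=2, total_cpus=10)
    print(m.available(now=25))
    print(m.available(now=40))
```

At time 25 both sites are listed, with the less busy `site-b` first. At time 40 only `site-b` remains, because `site-a` has not reported for more than 30 seconds.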

Interoperability:

Grid computing allows for the integration of resources from different sources, such as different hardware and software platforms, and enables the seamless sharing and use of these resources.

Past, Present, and Future of Grid Computing

The past:

The origins of grid computing can be traced back to the early days of high-performance computing (HPC), when scientists and researchers needed to share resources to solve complex problems. In the 1990s, the National Energy Research Scientific Computing Center (NERSC) at Lawrence Berkeley National Laboratory (LBNL) and the San Diego Supercomputer Center (SDSC) at the University of California, San Diego (UCSD) began experimenting with sharing computing resources across multiple organizations. Early grid computing systems included the Globus Toolkit, developed at Argonne National Laboratory and the University of Chicago together with the University of Southern California's Information Sciences Institute, and the Condor project, developed at the University of Wisconsin-Madison.

The present:

Today, grid computing is widely used in fields such as scientific research, business, healthcare, and government. Many organizations use it to improve efficiency and gain access to powerful computing resources. Grid computing has also evolved beyond sharing computational power to include the sharing of storage and data resources.

The future:

Grid computing is expected to keep evolving and expanding. As the amount of data being generated increases and the need for powerful computing resources to analyze it grows, the use of grid computing is expected to grow in data science and artificial intelligence. The growth of the Internet of Things (IoT) and edge computing is also expected to increase demand for distributed computing resources, and grid computing is likely to play a crucial role there.

In the future, it will be important to address the social and economic issues related to grid computing, such as security, privacy, and access to resources, and to create a sustainable business model that allows resources to be shared cost-effectively and efficiently.

Conclusion:

Grid computing is a distributed computing paradigm that allows resources to be shared across multiple organizations and locations. This makes efficient use of those resources and enables the solution of complex problems that require large amounts of computational power and data storage.

Thanks for visiting Idreeselahi.in. We hope this article on grid computing was helpful. If you have any issues or questions, please feel free to contact us. https://idreeselahi.in-contact-us

One thought on “FUNDAMENTALS OF GRID COMPUTING The Grid – Past, Present and Future(2023)”